The

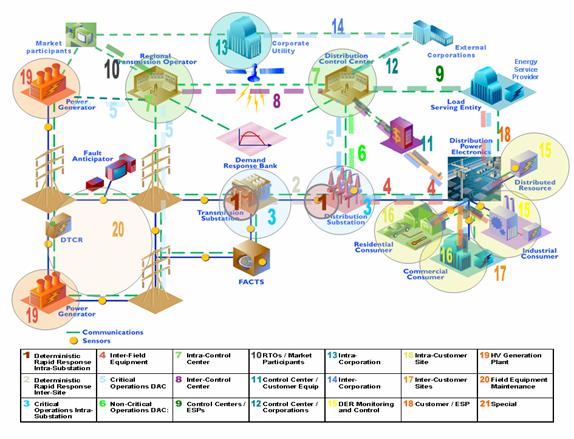

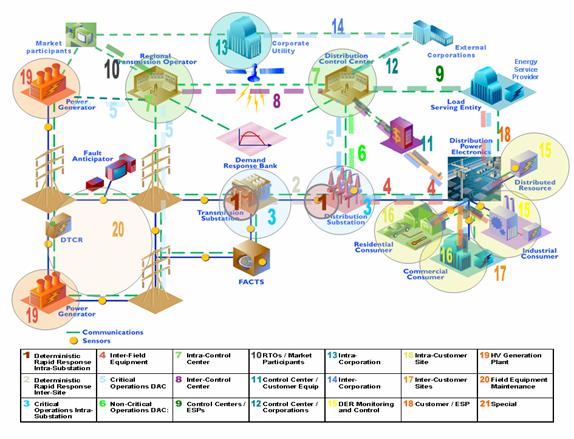

Integrated Energy and Communication Systems Architecture

Volume IV:

Technical Analysis

EPRI Project Manager

Joe Hughes

Cosponsor

Electricity

Innovation Institute Consortium for Electric Infrastructure to Support a

Digital Society (CEIDS)

EPRI • 3412

Hillview Avenue, Palo Alto, California 94304 • PO Box 10412, Palo Alto,

California 94303 • USA

800.313.3774 • 650.855.2121 • askepri@epri.com • www.epri.com

DISCLAIMER OF WARRANTIES AND LIMITATION OF LIABILITIES

THIS DOCUMENT WAS

PREPARED BY THE ORGANIZATION(S) NAMED BELOW AS AN ACCOUNT OF WORK SPONSORED OR COSPONSORED BY THE ELECTRIC

POWER RESEARCH INSTITUTE, INC. (EPRI). NEITHER EPRI, ANY MEMBER OF EPRI, ANY

COSPONSOR, THE ORGANIZATION(S) BELOW, NOR ANY PERSON ACTING ON BEHALF OF ANY OF THEM:

(A) MAKES ANY

WARRANTY OR REPRESENTATION WHATSOEVER, EXPRESS OR IMPLIED, (I) WITH RESPECT TO

THE USE OF ANY INFORMATION, APPARATUS, METHOD, PROCESS, OR SIMILAR ITEM

DISCLOSED IN THIS DOCUMENT, INCLUDING MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE, OR (II)

THAT SUCH USE DOES NOT INFRINGE ON OR INTERFERE WITH PRIVATELY OWNED RIGHTS,

INCLUDING ANY PARTY'S INTELLECTUAL PROPERTY, OR (III) THAT THIS DOCUMENT IS SUITABLE TO ANY

PARTICULAR USER'S CIRCUMSTANCE; OR

(B) ASSUMES

RESPONSIBILITY FOR ANY DAMAGES OR OTHER LIABILITY WHATSOEVER (INCLUDING ANY

CONSEQUENTIAL DAMAGES, EVEN IF EPRI OR ANY EPRI REPRESENTATIVE HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH

DAMAGES) RESULTING FROM YOUR SELECTION OR USE OF THIS DOCUMENT OR ANY

INFORMATION, APPARATUS, METHOD, PROCESS, OR SIMILAR ITEM DISCLOSED IN THIS

DOCUMENT.

ORGANIZATIONS THAT PREPARED THIS DOCUMENT

General Electric Company led by

GE Global Research (Prime Contractor)

Significant Contributions made by

EnerNex Corporation

Hypertek

Lucent Technologies (Partner)

Systems Integration Specialists Company, Inc.

Utility Consulting International (Partner)

ORDERING INFORMATION

Requests for

copies of this report should be directed to EPRI Orders and Conferences, 1355

Willow Way, Suite 278, Concord, CA 94520. Toll-free number: 800.313.3774, press 2, or internally x5379; voice: 925.609.9169; fax: 925.609.1310.

Electric

Power Research Institute and EPRI are registered service marks of the Electric

Power Research Institute, Inc. EPRI. ELECTRIFY THE WORLD is a service mark of

the Electric Power Research Institute, Inc. All other trademarks are the

property of their respective owners.

Copyright ©

2002, 2003, 2004 Electric Power Research Institute, Inc. All rights reserved.

CitationS

This document

describes research sponsored by EPRI and Electricity Innovation Institute.

The publication is a

corporate document that should be cited in the literature in the following

manner:

THE INTEGRATED

ENERGY AND COMMUNICATION SYSTEMS ARCHITECTURE, EPRI,

Palo Alto, CA and Electricity Innovation Institute, Palo Alto, CA: 2003

{Product ID Number}.

This is the technical analysis

volume. It is based on the architectural principles introduced in Volume I

section 3. Specifically it discusses in several concise sections and some large

appendices the details of the analysis and technical results produced by the

project.

To summarize, this

volume discusses:

|

·

Architectural Principles

|

Relates

the principles introduced in Volume I to the specifics of the analyses

detailing them herein

|

|

·

Architectural Analysis

|

The

high-level strategies used to solve the problem, the different environments,

as well as common services, information models, and interfaces that were

identified as the results of this analysis.

|

|

·

Technology Analysis

|

A

comparative analysis of the universe of technologies available and which are

most closely aligned with IntelliGrid Architecture requirements

|

|

·

Deployment Scenarios

|

To

identify common integration scenarios and detail how IntelliGrid Architecture can be used to

accomplish them.

|

|

·

Benefits and Conclusions

|

A brief

summary of the benefits from a technology and interoperability standpoint.

|

|

·

Appendices A..E

|

Detailed

discussions of research by the team

|

This section

reprises the levels of abstraction framework presented in Volume I. By successively

abstracting the architectural analysis via these descriptions, the dominant

aspects of architectural issues were exposed and detailed.

·

Business needs

·

Strategic vision

·

Tactical approach

·

Deployment scenarios

A primary goal of

IntelliGrid Architecture project is designing a common architecture for utilities. This

section summarizes the principle modeling/analysis elements identified and

applied in IntelliGrid Architecture

|

·

Requirements

|

Common

industry requirements permit application constraints to be concisely and

precisely defined.

|

|

·

Services

|

Refining

applications into the services that can be combined in various ways to

achieve functional goals.

|

|

·

Information models

|

Common

building blocks of information exchanged to accomplish applications.

|

|

·

Interfaces

|

Low

level primitives that act as atoms to build the molecular common services of

IntelliGrid Architecture. The definition of these atoms facilitates the conveyance of the common

services across environmental boundaries that may utilize different

technologies.

|

This section

summarizes the results of detailed analysis performed on the following

important but often considered independent subjects crucial to collectively

achieving a robust architecture.

·

Enterprise management

·

Data management

·

Platform

·

Communications

·

Security

In deploying

applications using IntelliGrid Architecture, this section identifies the issues to consider and

proposed solutions in performing integration.

|

·

Field Device Integration

|

Shows

how 61850 and DNP3 based SCADA systems can be integrated to provide unified

rich model based device access and control.

|

|

·

Enterprise Management

|

Encompasses

the integration of a DMTF based Enterprise Management systems with TC 57

based utility systems.

|

|

·

Application Integration

|

How a

deployment of the CIM and GID

can be used to create a platform for legacy application integration.

|

|

·

Data Analysis

|

As

recovery of money spent on asset related operations is not guaranteed, it is

critical that asset related costs be managed wisely.

|

|

·

Energy Market Integration

|

Describes

how a utility might integrate Energy Market Transaction Servers with utility

operational systems.

|

This section briefly

summarizes how IntelliGrid Architecture facilitates the realization of the following benefits:

·

Reusable infrastructure

·

Interoperability through standards

·

Available off the shelf adaptors

·

3rd party applications

·

Extensibility

·

Incremental approach

The following

table identifies and summarizes the major sections in this volume:

|

Section 1

Principles and Requirements

|

The overall

principles and requirements used to develop the architecture and a brief

description of the problems it was intended to solve.

|

|

Section 2

Analysis

|

The high-level

strategies used to solve the problem, the different environments, as well as

common services, information models, and interfaces that were identified as

the results of this analysis.

|

|

Section 3

Technology

Recommendations

|

Discussion of the

implementation of the common modeling elements (services, information models,

and interfaces) using specific recommended technologies within the defined

set of environments.

|

|

Section 4

Deployment Scenarios

|

Guidelines and

examples of how the architecture should be deployed by utilities.

|

|

Section 5

Benefits

|

Summarized the

benefits of IntelliGrid Architecture from a technical standpoint

|

|

Appendix A:

Security

|

A comprehensive

discussion of security considerations for energy industry and related

communications

|

|

Appendix B:

Network Management Technologies

|

A discussion of

network management technologies and needs

|

|

Appendix C:

Resilient Communication Services

|

Discusses those

technologies and requirements that are necessary for robust communications

networks.

|

|

Appendix D:

Technologies, Common Services, and Best Practices

|

A detailed summary

of all the individual technologies, common services, and best practices

identified by IntelliGrid Architecture project

|

|

Appendix E:

Environments

|

A detailed

description of IntelliGrid Architecture environments

|

Document Overview.. v

Architectural Principles v

Architectural Analysis v

Technology Analysis vi

Deployment scenarios vi

Benefits and conclusions vi

What is in this volume. vi

Contents. viii

List of Figures. xi

List of Tables. xiii

Architectural Principles

and Requirements. 1

Enterprise Activities and

Domain Use Cases 3

Strategic Vision. 5

Data Management and

Exchange Issues 5

Abstract Modeling Tools 6

Abstract Use Cases 10

Domain Use Case

Requirements Analysis 13

Analyses of Abstract Use

Cases 20

Abstract Use Case

Requirements Conclusion. 39

Tactical Approach. 41

Environments 42

Technology Independent

Architecture. 43

Technology Assessment 45

Architecture Conclusions 46

Deployment Scenarios 48

Enterprise Layering. 48

Migration. 50

Architectural Analysis. 1

Requirements Analysis 1

Aggregated Requirements 2

Domain Use Case Analysis 6

Abstract Use Case

Analysis 6

Common Services 33

Common System and Network

Management Services 34

Common Data Management

and Exchange Services 35

Common Platform Services 37

Common Security Services 38

Common Information Models 40

Enterprise Management

Common Information Models 41

Power Systems Common

Information Models 42

Generic Interfaces 44

Namespaces 48

IntelliGrid Architecture Environments 51

Conclusion. 52

Technology and

Implementation Recommendations. 1

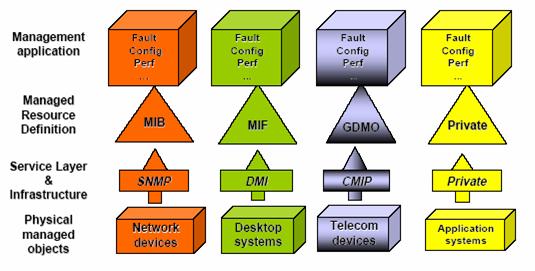

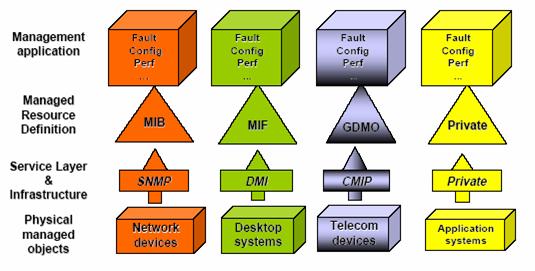

Enterprise Management

Technologies 2

Analysis of Enterprise

Management Technologies 2

Overlapping/ Harmonizing/

Missing Enterprise Management Technologies 6

Data Management and

Exchange Technologies 7

Horizontal Data

Management Technologies 8

Field Device Technologies 12

Control Center/Operations

Technologies 21

Energy Market Energy

Market Technologies 44

Platform Technologies 47

Analysis of Platform

Technologies 47

Communications

Infrastructure Technologies 49

Analysis of

Communications Infrastructure Technologies 49

Communication

Infrastructure Integration and Federation Strategy. 56

Overlapping, Harmonizing

and Missing Communications Infrastructure Technologies 60

Security Technology

Overview.. 61

Interdependencies 61

Service Specific

Technological Recommendations 63

Communication Technology

Specific Recommendations 64

Technologies that need to

be created. 64

Deployment Scenarios. 1

Introduction. 1

Deployment Scenarios 2

Field Device Integration

Deployment Scenario. 2

Enterprise Management and

Power Systems Integration Deployment Scenario. 8

Application Integration

Deployment Scenario. 15

Asset Management

Deployment Scenario. 20

Energy Market Integration

Deployment Scenario. 25

Benefits. 1

Reusable Infrastructure. 1

Standards 1

Off the shelf Adapters 1

3rd Party Applications 1

Extensible. 2

Incremental approach. 2

Conclusion. 2

APPENDIX A - SECURITY....................................................................... A-1

APPENDIX B – NETWORK MANAGEMENT TECHNOLOGIES............... B-1

APPENDIX C – RESILIENT COMMUNICATION SERVICES..................... C-1

APPENDIX D – TECHNOLOGIES, SERVICES, AND BEST PRACTICES

................................................................................................................. D-1

Appendix E - Environments.............................................................. E-1

Figure 1‑1: IntelliGrid Architecture Framework. 2

Figure 1‑2 Domain

Use Cases From List of Business Functions. 5

Figure 1‑3:

Integrated Energy and Communication Systems Architecture (IntelliGrid Architecture) RM-ODP Model 8

Figure 1‑4 Abstract

Use Cases from Domain Use Cases. 11

Figure 1‑5

Environments from Requirements. 12

Figure 1‑6 The IntelliGrid Architecture

Abstract Use Cases. 12

Figure 1‑7 IntelliGrid Architecture

Secure Enterprise Architecture. 21

Figure 1‑8

Enterprise Management and Power System Management Treated Independently. 22

Figure 1‑9

Integration of Enterprise and Power System Management 23

Figure 1‑10 Energy

Market Transaction Service Communication. 27

Figure 1‑11

Integration Of Device Data. 30

Figure 1‑12

Applications To Be Integrated. 31

Figure 1‑13

Application Integration. 33

Figure 1‑14 Example

Of Integrated Data. 34

Figure 1‑15 Field

Service Integration Example. 35

Figure 1‑16:

Representation of Security Domain Concept 36

Figure 1‑17

Point-to-Point Integration. 40

Figure 1‑18 Diagram

of Components, Services, and Interfaces. 42

Figure 1‑19 Adapters

Use. 44

Figure 1‑20

Technology-Independent Architecture. 45

Figure 1‑21 IntelliGrid Architecture

Analysis Logic Flow.. 47

Figure 1‑22 Utility

Integration Layering. 49

Figure 2‑1 Example

of eCommerce Message Flow.. 9

Figure 2‑2 eCommerce

Registry. 10

Figure 2‑3 Exposing

Server Data. 14

Figure 2‑4 Device

Information Exchange Model 15

Figure 2‑5

Application Integration Example. 16

Figure 2‑6 Message

Queuing. 17

Figure 2‑7 Publish

and Subscribe. 17

Figure 2‑8

Traditional Data Warehouse Architecture. 21

Figure 2‑9 Common

Information Model Based Data Warehouse. 22

Figure 2‑10 Example

Of A Data Warehouse Star Schema. 23

Figure 2‑11 Data

Warehouse Connected to a Message Bus. 25

Figure 2‑12 Data

Mart Proliferation. 26

Figure 2‑13 Example

Of A CIM/GID Based Data Warehouse. 27

Figure 2‑14 Example

of CIM/GID Warehouse Connected to a Message Bus. 28

Figure 2‑15 Example

Information Model 43

Figure 2‑16 Use of a

Common Exchange Model 44

Figure 2‑17

Applications Connect to Off the Shelf Middleware Via the Standard API’s. 45

Figure 2‑18 Applying

Technologies to Environments. 46

Figure 2‑19 Ways

That A Generic Interface Can Be Applied. 47

Figure 2‑20 Example

Namespace. 49

Figure 2‑21 Example

Generic Interface. 50

Figure 2‑22 Summary

of IntelliGrid Architecture Environments. 52

Figure 2‑23

Technology Independent Architecture. 53

Figure 3‑1 RDF,

RDFS, and OWL Build on Existing W3C Work. 9

Figure 3‑2 The Tree

of Knowledge Technologies. 9

Figure 3‑3 RDF

Example. 10

Figure 3‑4 RDFS

Example. 10

Figure 3‑5 OWL

Example. 11

Figure 3‑6 The

Evolution of DNP3 and IEC61850. 13

Figure 3‑7 Profile

Comparison: Directed Communications – Connection-oriented. 15

Figure 3‑8 Profile

Comparison: Multi-cast communications – Connectionless. 16

Figure 3‑9 Service

Comparison. 17

Figure 3‑10 IEC61850

Object Model 19

Figure 3‑11 TC 57

Standards. 21

Figure 3‑12

Simplified Fragment of CIM Power System Model 23

Figure 3‑13

Simplified Fragment of CIM Asset/Work Model 24

Figure 3‑14

Generalizations for power system resource and conducting equipment 25

Figure 3‑15 Defining connectivity for conducting

equipment 26

Figure 3‑16

Transformer model illustrating use of aggregation. 27

Figure 3‑17:

IEC61850 Models and Connections with IEC61970 Models. 28

Figure 3‑18 Proposed

Harmonization of 61850 and 61970 Information Models. 31

Figure 3‑19 Example

of a Full Mesh type of Namespace. 35

Figure 3‑20 Example

TC57PhysicalNamespace. 36

Figure 3‑21 Example

TC57ClassNamespace. 36

Figure 3‑22 Example

TC57ISNamespace. 37

Figure 3‑23

Traditional view of utility data. 37

Figure 3‑24 Customer

data within a CIM Network View.. 38

Figure 3‑25 Example

Full Mesh Namespace. 39

Figure 3‑26

Interface Lineage. 40

Figure 3‑27 Example

Of The Use Of The High Speed Data Access Interface. 41

Figure 3‑28 Example

Of The Use Of The Time Series Data Access Interface. 42

Figure 3‑29 CIM/GID

Based Data Warehouse. 42

Figure 3‑30

TC57Namespace Used As A Subscription Topic Tree. 43

Figure 3‑31 Model

Driven Message Definition. 44

Figure 3‑32 Migration To 61850 In The Substation

Communications Environment 58

Figure 3‑33 Next Generation SONET --- the

Multi-service Approach and its Benefits. 60

Figure 4‑1 Proposed

Harmonized IEC61850 and IEC61970 Information Models. 4

Figure 4‑2 DNP and

IEC61850 Based SCADA Networks. 4

Figure 4‑3 Mapping a

Device Model to the CIM.. 6

Figure 4‑4 Wrapping

DNP with a IEC61850 Based Namespace. 7

Figure 4‑5 Today's

Enterprise Management Architecture. 8

Figure 4‑6 A

Web-based Interface to the Enterprise Management System.. 9

Figure 4‑7 Web-based Enterprise Management Architecture,

which enables the Integration and Federation of Heterogeneous IntelliGrid Architecture management

technologies. 11

Figure 4‑8

Collaborations amongst multiple Management Systems. 11

Figure 4‑9 The

Internal Architecture of a WBEM Server and its interactions with Management

Application Clients and Managed Devices/Systems. 12

Figure 4‑10 Using A

Separate DMTF Integration Layer 14

Figure 4‑11 Using

DMTF CIM Only On The Enterprise Integration Layer 15

Figure 4‑12 More

Complete Integration. 15

Figure 4‑13

Application Integration Scenario. 15

Figure 4‑14 Control

Center Application Integration. 17

Figure 4‑15 Specific

GID Interfaces Used For Application Integration. 17

Figure 4‑16 Data

Synchronization. 18

Figure 4‑17 Keeping

the Mapping From Assets To the Power System In Sync. 19

Figure 4‑18 CIM/GID

Based Data Warehouse With Message Bus. 21

Figure 4‑19 Asset

Management Integration Example. 22

Figure 4‑20 Combined

Application Integration And Data Integration Architecture. 23

Figure 4‑21 GID

Interfaces Used to Integrate Applications and Data. 24

Figure 4‑22 ETSO

ebXML Or NERC eTagging Based Energy Market Server 26

Figure 4‑23 CME

Based Integrated Energy Market Systems. 27

Figure 4‑24 ETSO or

eTagging Based Integrated Energy Market Systems. 27

Table 1‑1 Principles

Applied to Each Level of Abstraction. 2

Table 1‑2 Summary of

RM-ODP Viewpoints. 7

Table 1‑3 Enterprise

Levels. 48

Table 2‑1: Relating

Security Processes to Functions and Services. 29

Table 2‑2: Relating

Security Processes to Functions and Services. 30

Table 2‑3: Primary

Services and the additional Security Services required to implement 30

Table 2‑4: Services

needed for Intra/Inter Domain Security. 32

Table 3‑1 Matrix Of

GID Functionality. 32

Table 3‑2: A

comparison of various Wireless Data Technologies. 55

Table 3‑3: Security

Services vs. Security Domain Usage. 62

Table 3‑4: Summary

of recommendations for Identity Establishment and Confidentiality. 64

This

page intentionally left blank.

If one were to

envision a utility where an IntelliGrid Architecture based architecture had been completely

adopted, one would see that it provides an ideal platform for higher-level

analysis across the entire enterprise. A

simple analogy might be that a utility executive ideally wants to drive a

utility much like a pilot might fly an airplane during cloudy conditions. In this case, a pilot can use just

instruments to get a complete picture.

That is, all required aspects of fight operation are visible via a well

laid out set of instruments. Similarly,

since most utility operational information and risk is not visible unaided, a

utility manager uses a set of software components as instruments to get a

complete picture. The instruments condense and summarize all the required

information. To actually direct the airplane, the pilot uses a mechanical

interface consisting of a limited set of pedals, switches, levers, and

“steering wheel”. How the instruments

and mechanical interface connect to the airplane and outside world is in

someway irrelevant to the pilot. One

could say that pilots only care that a set of inputs lead to a set of desired

results via comprehensive user interface.

Similarly, a utility executive wants a simple set of applications to

help direct the utility enterprise.

It is this unified comprehensible user

interface that IntelliGrid Architecture ultimately seeks to enable. This interface may exist at many levels of

the utility. For example, an executive

may be primarily concerned with balancing profit and risk whereas an

operational supervisor may be primarily concerned with balancing income and

reliability. However, it is clear that

the primary goals of IntelliGrid Architecture are to enable a comprehensive view of operations and

analytics in a secure manner.

These end-to-end analysis applications largely

don’t exist today because without a single unifying architecture they are too

expensive to develop. There are a great

variety of systems being used in a utility. In order to get a true picture of

the entire utility, these systems and data need to be integrated. Consequently, development of the end to end

analysis application can be hugely expensive.

Therefore in the past, even though the integration was technologically

feasible, it was not practical because the expense was prohibitive. It is IntelliGrid Architecture Team’s belief that only via

the deployment of a unified architecture and standard solutions can the new

analysis applications be economically deployed.

The IntelliGrid Architecture provides a unified architecture to realize this vision.

This

section starts with the requirements of utility industry applications that are

captured in the form of Domain Use Case. However, focusing on the applications

alone often bypasses the creation of common infrastructure capabilities that,

while burdensome to create for a single application, make it possible to

realize the myriad of functions that utility participants anticipate. In order

to focus on the capabilities of the shared architecture upon which secure

end-to-end looking applications can be built, we examine six essential

“abstract use cases” that describe the requirements of the common

architecture. This section shows the

derivation of these Abstract Use Cases and then describes the analysis done by

IntelliGrid Architecture team to develop the architecture.

As the analytical

phases of IntelliGrid Architecture project progressed, the team iteratively analyzed use cases

and derived solutions at subsequent levels of abstraction with increasing

detail. A useful analogy is to say that

the analysis began by starting from business needs, and gradually descending

towards a more nearly realizable solution via design goals, abstract design and

technology recommendations. We reprise

this analogy from Volume I section 3 in Figure

1.

Figure

1: IntelliGrid Architecture Framework

This figure shows the levels of abstraction

used during IntelliGrid Architecture Enterprise Architectural Analysis.

At each level of

abstraction, the team looked to discover commonality so that a unifying

architecture could be discovered. The

levels are described in Table

1.

|

Table 1 Principles Applied to Each Level of Abstraction

|

|

Level of

Abstraction

|

Principles

applied to each problem area at this level

|

|

Business Need

|

The Business

Needs of the power industry were identified and their information

requirements were assessed in the analysis of the utility operations

functions and management.

|

|

Strategic

Vision

|

The Strategic

Vision for the IntelliGrid Architecture reflects the ultimate objectives

for an information infrastructure that can meet all of the business needs,

including network configuration requirements, quality of service

requirements, security requirements, and data management and exchange requirements. This Strategic Vision is based on unifying:

–

Abstract Modeling

–

Security Management

–

Network and System Management

–

Data Management and Exchange

–

Integration and Interoperability

|

|

Tactical

Approach

|

The Tactical Approach uses Information

Models, Common Services and Generic Interfaces to provide a deployment

environment and technology independent solution for implementing

interoperable systems and for managing the migration from legacy systems

toward fully integrated systems.

|

|

Technology and

Best Practices

|

This section

describes how the Tactical Approach may be realized using implementable

technologies. It compares recommended

technologies and discusses their merits in regard to how well they support

the IntelliGrid Architecture.

|

|

Deployment

Guidelines

|

Provides

guidelines on how to apply the architecture in a layered manner. This is intended to help system designers

create migration plans in which legacy applications can be adapted to conform

to the architecture and new applications can be non-disruptively added.

|

The remainder of

this section discusses the principles applied at each level of abstraction in

more detail.

IntelliGrid Architecture is designed

to provide an architecture to be used across all of the utility. The first task that IntelliGrid Architecture Team undertook

was the creation of a comprehensive list of over 400 Enterprise

Activities. Simultaneously with the

development of this list, some common themes quickly became apparent as the

project team analyzed the requirements.

It was clear that the architecture would need to provide common

strategies in the following areas that underlay nearly all of the requirements

that were gathered:

·

Network and System (Enterprise) Management. For an area that is relatively mature in

commercial networks, the science of monitoring and controlling the

communications network itself is surprisingly unknown or at the very least,

primitive, in power system automation.

The key here will be to harmonize network monitoring technologies and

network object models with the functional equivalents in the power industry,

and to integrate both with security management.

·

Data Management and exchange. The sheer volume and variety of data required

in order to operate a power system within the Digital Society poses staggering

challenges in standardizing interfaces for reading, writing, publishing, and

subscribing to data. In this area, the

key solution will be to identify specific commonality and diversity of how data

is managed and exchanged.

·

Basic networking and connectivity

infrastructure. How are the myriads of

device and communications technologies to connect? In general, IP-based networks were the

obvious solution, but utility requirements posed unique requirements of

reliability, wireless access, changing configurations, and quality of service.

·

Security and access control. Deregulation and other effects of the Digital

Society are forcing utilities to rely on public networks provided by third

parties, communicate with their competitors, cross organizational boundaries,

and expand their communications networks inward to their own organizations and

outward to the customer. All of these

forces make the need for cyber-security ubiquitous in power system operations. Encryption and authentication technologies

abound, but the chosen strategy is to tailor security solutions to particular

problem domains, and link them together with shared security management

services.

The Team used the

list of requirements to select a set of functions from the list of Enterprise

Activities on the basis of their architectural significance -- that is the architectural sophistication

necessary to achieve their implementation.

Called Domain Use Cases, this small set of functions more intensively

analyzed consist of:

·

Wide Area Measurement and Control – in

particular, the requirements for developing a self-healing, self-optimizing

grid that could predict emergencies rather than just react to them, and

automate many reliability functions currently done manually or not at all.

·

Advanced Distribution Automation –the

challenges raised by the use of Distributed Energy Resources, renewable energy

sources, and the use of fault detection, fault location, sectionalization and

automatic service restoration over wide areas of the service territory and

multiple organizational boundaries.

· Customer

Interface – including the challenges of real-time pricing, demand response,

automatic metering, integration of the utility communications network with

building automation, and the requirements needed to integrate real-time data

gathered from the power network with business policies in order to securely

enable trading of energy in a deregulated environment.

This process

illustrated in Figure 2:

Figure

2 Domain Use Cases From List of Business Functions

The amount of data

being collected or capable of being collected is increasing exponentially. This

rapid expansion of data retrieval results from the fact that more field devices

are being installed and that these field devices are becoming more

"intelligent" both in what power system characteristics they can

capture, and also in what calculations and algorithms they can execute which

result in even more data.

As distribution

automation extends communications to devices on feeders, as substation

automation expands the information available for retrieval by substation

planners, protection engineers and maintenance personnel, and as more power

system asset information is stored electronically in Geographical Information

Systems and AM/FM systems, even more varieties and volumes of data will need to

be maintained and managed.

Data management is

a complex issue, encompassing many aspects of data accuracy, acquisition and

entry, storage and access, consistency across systems, maintenance, backup and

logging, and security. These are discussed in the following sections.

Data management

must address a complex set of issues that include the following services:

·

Validation of source data and data exchanges

·

Ensuring data is up-to-date

·

Management of time-sensitive data flows and

timely access to data by multiple different users

·

Management of data consistency and

synchronization across systems

·

Management of data formats in data exchanges

·

Management of transaction integrity (backup and

rollback capability)

·

Management of the naming of data items

(namespace allocation and naming rules)

·

Data Accuracy

·

Data Acquisition

·

Data Entry

·

Data Storage and Access Management

·

Data Consistency across Multiple Systems

·

Database Maintenance Management

·

Data Backup and Logging

No single

cross-industry technology addresses all of these issues, but multiple solutions

and best practices are available for different aspects.

The first

principle of IntelliGrid Architecture architectural vision is the principle of abstract

modeling techniques, as expressed in the following quote:

“There are limits to human ability to

understand [truly complex systems] and to solve large sets of system

equations. The problem must be broken

down or divided into a series of smaller problems that can be solved. Modeling is one of the proven and

well-accepted engineering techniques that simplify the system, so that we can

better understand the system being developed.

System simplification is achieved through the introduction of levels of

abstraction, which allow the modeler to focus on one particular aspect of the

system at a time.”

So that the

abstractions used in IntelliGrid Architecture analysis may be more clearly understood, the

IntelliGrid Architecture has been developed and refined using an international

standard for architecture design call the “Reference Model for Open Distributed

Processing” framework, RM-ODP. RM-ODP is the reference model for defining open,

distributed software system architectures. It was developed with extensive

input from the technical community and represents a substantial body of

knowledge. It was therefore the natural selection as a means for developing and

expressing IntelliGrid Architecture.

RM-ODP is a formalized approach to developing

abstract models of system functions, which helps to ensure that all

requirements are identified and analyzed before the functions are

implemented. It breaks down the analysis

and description of an architecture into five largely complimentary

viewpoints. Each viewpoint answers a

different set of questions. These

viewpoints are summarized in the following table.

|

Table 2 Summary of RM-ODP

Viewpoints

|

|

Viewpoint

|

Question

|

Contains

|

|

Enterprise

Viewpoint

|

Who is

involved?

|

Information

about the various participants and functions implemented in the energy

industry

|

|

Information

Viewpoint

|

What

information must be exchanged?

|

Models of

information exchanged, agreements between parties, and roles and

relationships that underpin the data of industry functions

|

|

Computational

Viewpoint

|

How is this

information going to be exchanged?

|

The mechanics

related to information exchange i.e. a discussion of the interfaces required.

|

|

Engineering

Viewpoint

|

Where is the

information located and where will it be sent?

|

The

configuration for where to physically deploy clients, servers, databases, and

subsystems in terms of what component is deployed on which network for

example. This view describes the

partitioning of a solution and where the pieces reside and closely

corresponds to IntelliGrid Architecture Environments.

|

|

Technology

Viewpoint

|

Which

technologies and best practices are to be used to accomplish this?

|

Actual

technology and best practice solutions that can be used to carry out the

functions

|

It is important to note

that RM-ODP is a framework for describing architectural

views, and provides a reference model for developing architectures, but it is

not an architecture itself.

In the IntelliGrid Project, the main purpose for using RM-ODP was to ensure that all architecturally

significant requirements were identified for existing and future power

system operations functions.

The Unified Modeling

Language, UML, provided both the abstract language and computer tools that the

team employed for expressing its analysis within the RM-ODP framework. These two approaches, RM-ODP and UML, complemented each other in the

development of the architecture:

·

As a framework, RM-ODP is very abstract and does not call out the

use of a particular notation nor does it have tools that are directly linked to

its concepts.

·

UML has very good tools available, but is not an

architecture standard and is not usually used at the same level of scope as RM-ODP.

The two can be used

together because they are complementary and support similar levels of

abstraction. Even though there is not a direct match between RM-ODP terms and UML terms, constructs can be

developed in UML to realize RM-ODP

concepts. The widely available UML tools

can then be used to create the diagrams and underlying database that is

necessary for understanding the complex interactions of power system functions

across multiple areas. Both are useful

standards as both embody the following concepts:

·

UML is a technology neutral way of specifying use

cases, data, and software components.

RM-ODP separates technology specifics into the

Technology View. IntelliGrid Architecture uses UML to

specify a technology neutral architecture.

·

UML is a deployment neutral way of specifying

data and software components. RM-ODP separates deployment specifics into the

Engineering View. IntelliGrid Architecture uses UML to

specify deployment neutral architecture that can be applied to a variety of

environments.

In conclusion, UML and

RM-ODP have enabled IntelliGrid Architecture Team to design an

architecture that is flexible and can be applied to a diverse set of

environments and technologies. UML and

RM-ODP provide a standard language so that the

design of the architecture can be communicated to others.

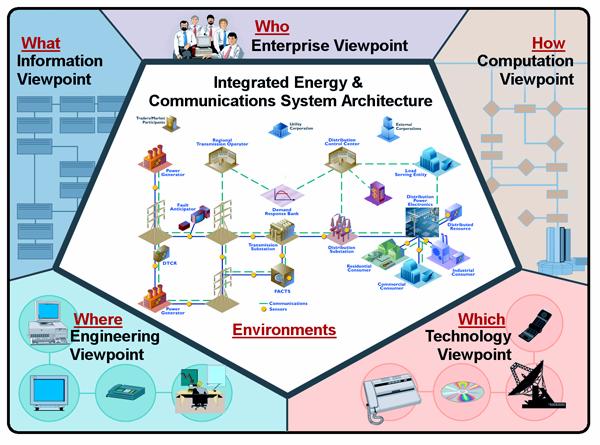

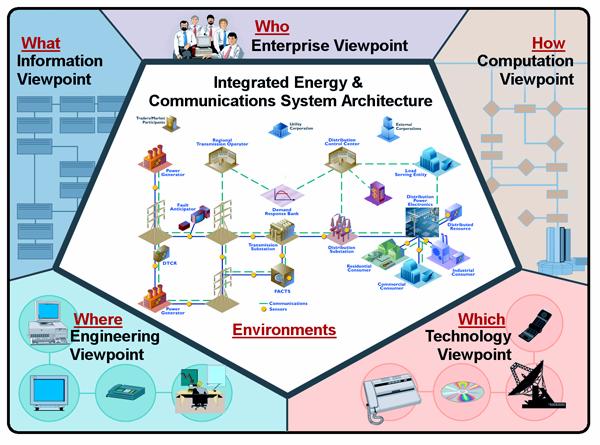

Figure

3: Integrated Energy and Communication

Systems Architecture (IntelliGrid Architecture) RM-ODP Model

The abstract

modeling process used during IntelliGrid Architecture project leading from RM-ODP to the final architecture is outlined below.

In general, the UML concept of “Use Cases” was used to capture stakeholder

requirements, and a UML tool called Magic Draw was used to generate diagrams

that expressed the architecture in the five RM-ODP viewpoints.

1.

UML Use Case

Template: The highest level UML construct for describing a function is the

Use Case, which can map more or less into the RM-ODP Enterprise Viewpoint. Since not many people

have UML tools, people in the IEC and IEEE have been describing functions using

a “Use Case Template”. A Use Case

Template is a MS Word document that captures the UML concepts of Actors, Roles,

Associations, Classes, and other UML constructs. This Use Case Template could

then be used to enter the information into a UML tool, like Rational Rose or

Magic Draw. The Use Case Template doesn’t have any standard format, but usually

includes sections to:

·

Describe the function in narrative form

·

Identify the Actors and Information Exchanged

·

Identify the steps involved in exchanging

information between Actors.

2.

UML Use Case

Template to IntelliGrid Architecture Domain Template: In IntelliGrid Architecture project, the team renamed

the Use Case Template the “Domain Template” and modified it in a number of

ways:

·

Added a number of additional fields and

requirements beyond those of a traditional UML Use Case in order to capture

more RM-ODP concepts, such as policies and contracts

between Actors.

·

Added the RM-ODP concept of Common Services (services that

can be used by many different functions) as well as a spreadsheet to capture

common requirements across all Use Cases.

·

The IntelliGrid Architecture team identified a number of common

Environments, discussed later in section 2.

Each environment has different configuration, performance, security and

data management requirements. Each

“step” in a Use Case was assigned to a particular environment for use later in

determining appropriate technologies to use for that step.

3.

Importing Domain

Templates into UML Tool: Domain

Experts filled out Domain Use Cases using the Domain Template. These were then

imported automatically into the Magic Draw UML tool. The resulting diagrams

became a tool for IntelliGrid Architecture team to further analyse the requirements captured

in the Domain Templates.

4.

Results from the

RM-ODP

Analysis Become the Technology and Deployment Neutral Reference Architecture:

As stated above, RM-ODP is a reference model for defining a distributed

system architecture for a particular software function. However, the purpose of

IntelliGrid Architecture project is not to develop a single architecture for one specific

function; the purpose of IntelliGrid Architecture project is to develop a Reference Architecture

for all

power system functions. The

relationships captured in the UML tool can be used as a database for

determining the appropriate approach to any power system communications

problem. In this sense, the database

becomes the architecture.

Through analysis

of the Domain Use Cases, the team identifies a limited set of common functions

necessary to implement each Domain Use Case. In order to capture this common

functionality IntelliGrid Architecture team derived a set of Abstract Use Cases. The Abstract Use Cases are:

·

Integration of Enterprise Management – The

integration of software and hardware component management functions with power

system functions

·

Integration of Utility Wholesale and Retail

Market Operations - The integration of market operation functions with power

system functions

·

Device Integration – The integration of

heterogeneous power system devices.

·

Application Integration – The integration of

heterogeneous power system applications to meet operational needs.

·

Data Integration – The integration of

heterogeneous power system data to meet analytic needs.

·

Security Integration – The integration of

security across multiple domains.

The process of

using Domain Use Cases to derive Abstract Use Cases is a key simplification

used by IntelliGrid Architecture Team. That is, the

Team realized that it would be impossible to analyze all conceivable Domain Use

Cases within a limited timeframe and budget.

Instead, the Team realized that they had to pick a much smaller set of

“architecturally significant” Domain Use Cases. These “architecturally significant” use

cases were then used to create more generalized Abstract Use Cases as

illustrated in Figure 0‑4.

Figure

0‑4 Abstract

Use Cases from Domain Use Cases

This second set of

use cases is abstract because they are not tied to any particular utility

function. However, the Abstract Use

Cases are more useful in deriving detailed components of an architecture

because they allowed the Team to abstract away the specifics of Domain Use

Cases and permitted the team to focus on the commonality and diversity of all

Domain Use Cases. The commonality is

expressed as a set of common modeling elements and the diversity is expressed as

a set of environments and technologies as shown in Figure 5:

Figure

5 Environments from Requirements

The abstract set

of use cases is illustrated in Figure6 below:

Figure6 The IntelliGrid Architecture Abstract Use Cases

This section

discusses the derivation of the Abstract Use Cases from the Domain Use Cases.

ADA

Advanced

Distribution Automation (ADA) involves software applications in the control

center supplemented by applications and functions implemented in field

equipment. The control center applications provide the global analysis of the

distribution system state and capabilities and are the overarching functions in

control of distribution system operations, while the field equipment

applications provide local information and control.

The ADA

applications in the control center rely heavily on data from many different

sources, and going to different systems:

·

SCADA system for real-time data from field

equipment and control command to field equipment, including both substations

and feeder equipment

·

DER

equipment, either directly or indirectly through DER Aggregators

·

Energy Management System (EMS) for transmission information and

·

Geographical Information System (GIS) and/or Automated Mapping and Facilities

Mapping (AM/FM) systems for power system facilities data and physical

connectivity data

·

Customer Information System (CIS)

·

Work Management System (WMS)

·

Distribution Planning Systems

·

Market Operations systems

The primary

architectural requirements for ADA are focused on data management. Correct,

available, and timely data are crucial to the ADA function operating properly.

However, since data comes from many different sources and since the systems

acting as these sources usually are provided by different vendors, the

coordination, synchronization, integration of systems, and mapping of data

elements across these systems is a major problem.

In addition,

because real-time control of the power system is a major aspect of ADA, both

security and network management are critical to safe and reliable operation of

the power system. Therefore the main requirements are those associated with the

“Critical Operations DAC” Environment and the “Intra-Control Center”

Environment ( a complete list of IntelliGrid Architecture Environments can be found in Appendix

E):

1.

Security Requirements

·

Provide Authorization Service for Access Control

(resolving a policy-based access control decision to ensure authorized entities

have appropriate access rights and authorized access is not denied)

·

Provide Information Integrity Service (data has

not been subject to unauthorized changes or these unauthorized changes are detected)

·

Provide Audit Service (responsible for producing

records, which track security relevant events)

·

Provide Credential Renewal Service (notify users

prior to expiration of their credentials)

·

Provide Security Policy Service (concerned with

the management of security policies)

·

Provide Single Sign-On Service (relieve an

entity having successfully completed the act of authentication once from the

need to participate in re-authentications upon subsequent accesses to managed

resources for some reasonable period of time)

·

Provide User Profile and User Management

(combination of several other security services)

·

Provide Security Discovery (the ability to

determine what security services are available for use)

2.

Network and System Management Requirements

·

Provide Network Management (management of media,

transport, and communication nodes)

·

Provide System Management (management of end

devices and applications)

3.

Data Management Requirements

·

Support the management of large volumes of data

flows

·

Support keeping the data up-to-date

·

Support extensive data validation procedures

·

Support keeping data consistent and synchronized

across systems and/or databases

·

Support timely access to data by multiple

different users

·

Support frequent changes in types of data

exchanged

·

Support management of data whose types can vary

significantly in different implementations

·

Support specific standardized or de facto object

models of data

·

Support the exchange of unstructured or

special-format data (e.g. text, documents, oscillographic data)

·

Provide discovery service (discovering available

services and their characteristics)

·

Provide conversion and protocol mapping

Therefore, if one

abstracts from these functions, one can say that ADA from an architectural

perspective requires:

·

Integration of many different systems developed

by different vendors for differing requirements

·

Development of a platform that spans many

different systems, applications, and databases

·

Management of data across multiple systems,

including data consistency and synchronization with short timeframes

·

A system to manage configuration and change

·

Security integration across multiple security

domain

Customer Interface

With the advent of

deregulation, the interface between ESP and consumer has become more important,

because customers can (and have) switch(ed) energy providers and because they

can now be an additional source of revenue if new energy services can be sold

to them, or if the utility rights within the customer premises can be used to

sell access to other businesses.

The expansion of

system operations coordination and control down to the end user level creates

one of the key justifications for IntelliGrid Architecture. An enterprise-wide architecture such

as IntelliGrid Architecture offers a tremendous opportunity for improved operational efficiency,

improved control of customer processes based on supply system conditions, use

of customer-owned and operated generation and power quality improvement

technologies as part of overall system management and to achieve the required

levels of reliability and power quality at the end user level. In as far as the other side of the equation,

implementation of load control/demand response programs provides utilities with

another key tool to ensure power system stability and security.

Applications

related to customer interface must be coordinated closely with distribution

automation and distributed resource applications, as well as market

operations. Key applications include

·

Real time pricing

·

Load management

·

Residential customer applications, such as load

control in response to real time pricing incentives

·

Direct customer energy management and load

control during system emergencies

·

Automatic evaluation of and recommendations for

increasing energy efficiency based on profiles of the customer site and loads

·

Control and performance evaluations for

residential generation

·

Power quality assessments and control.

Also critical are

commercial and industrial (C&I) applications such as commercial customer

participation in energy markets through aggregation of backup generation and

energy management, participation in ancillary services (such as volt/var

control, harmonic control, and reserve generation), real time commercial

facility power quality assessment solutions integrated with the distribution

system operation and integration of real time information concerning system

power quality and reliability.

By nature,

customer interface/consumer services applications share close coordination with

distributed automation, distributed resource, and market operation

applications. The current status of

utility industry restructuring, as well as the current state of technology,

necessitate that many consumer service applications rely on distributed

automation and distributed energy applications and their underlying

communications requirements.

Furthermore, consumer interface is playing a key role in market

operations where customer load and/or onsite generation may be aggregated and

utilized to bid into energy markets and key customers may have sufficient

requirements for power to play a role in bulk power trading, scheduling, and

supply scenarios.

The range and

scope of customer interface/consumer service applications is complex and

growing. The possibility of customers

and ESPs managing load down to the appliance level could generate requirements

with a level of granularity not seen in any other domain. However, at the present time and taking both

short and long term scenarios into consideration, the domain analysis covered

three key applications:

·

Real time pricing

·

Utility administered load control

·

Consumer side data collection

RTP is important because it requires

communication between the customer and the ESP in terms of the ESP providing RTP signals to the customer and the customer

potentially providing bids and forecasts back to the ESP.

Quality of service including high availability and timeliness of data is

crucial. There are large numbers of

customer with sensitive information on pricing and usage; therefore security is

a key consideration. Since future power

system operating scenarios will undoubtedly involve more two-way communication

with the customer and the ESP

as well as increased customer sensitivity to pricing data, this is a

significant area for requirements analysis.

Utility

administered load control is where, instead of responding to price signals, the

signal comes directly from the ESP

to control customer loads. This

application covers a wide range of issues, especially security across

organizational domains and the need for two-way communications to confirm load

control actions for future advanced demand responsive systems.

Data collection

from the consumer side is seen as critical to facilitate more active

involvement by customers in the interface to and participation in market

operations and energy management. In

terms of power quality monitoring, the information is intermittent and

sometimes infrequent, but timely, communication and notification is very

important when events do occur.

Analysis of the

requirements associated with these requirements showed trends that were common:

·

Database integration and management is critical,

with utilization on both customer and ESP systems and even inside one system, with

multiple installations and different purposes.

For instance, for ESPs, there may be one database for customer billing

data, a database for pricing data, and a separate database for operational

data, i.e., customer participation in RTP or load control programs. Some of the data requirements are low in

volume (such as usage data) while others may be high in volume with detailed

information (such as power quality monitoring data).

·

There are multiple levels and requirements of

security and in addition to ESP

security issues, which are significant and substantial, there are issues

relating to the privacy of customers and desire to secure customer data and

facilities from unauthorized access and cyber attack. Securing the consumer interface frequently

requires different technologies than securing ESP-specific functions.

·

Communication and bandwidth requirement run the

gamut from telephone line to wireless to fiber.

Much equipment related to customer metering has not been upgraded to

take advantage of the state of the art, so legacy systems and disparate data

transportation mechanisms require an enterprise-level approach to systems

integration.

·

Customer services factor in financial

transactions. Much as with market

operations, money will be changing hands in applications such as power trading

and real time pricing. This brings into

play a different set of considerations than the traditional ESP operations.

·

The amount, scope, and time frame for

transactions are constantly evolving. As

more customer services are identified and as the technology matures and

emerges, the far reaching vision of a consumer portal, where a customer can

manage every aspect of their interface with the electrical grid and energy

usage, comes into play. This

necessitates the ability to add and address requirements for technologies that

are still in the development stage.

As can be seen,

while this domain has some specialized requirements, it shares requirements

with several other domains regarding wholesale and retail market operations

integration, application integration and data integration.

Wide Area Measurement and Control

The goal of Wide

Area Measurement and Control (WAMAC) is to synchronize and coordinate the

measurements of the state of the power system across large geographic areas, to

model and simulate system behavior in real-time, to anticipate fast-changing

system conditions, and to support multiple automation and control response

capability. The core functions of WAMAC include:

·

Synchrophasor calculation –Historically, voltage

and current phasors were measured against a local reference angle, complicating

the state estimation process of determining system wide phasors against a

common reference. Synchro-phasors are measured over a wide area against a

common reference angle and the information can be made widely available, which

will greatly simplify the state estimation problem.

·

Dynamic model update – WAMAC depends on accurate

models of the state of the system and the capability of the system to respond

to control actions. WAMAC requires accurate and timely updated models.

·

Real time state measurement and security

assessment – WAMAC functions include steady-state and dynamic analyses and risk

assessment that consider multiple set of independent and dependent

contingencies while applying probabilistic models of power system components.

The increased dimension and limited time intervals of these function creates

communication and computational challenges.

·

Real-time proactive/preventive dynamic control –

The decision making for the proactive/preventive dynamic control actions

implies analyses of multiple complex scenarios with possible conflicting

results. Fast simulation based on adequate modeling is an imperative

requirement. The timely and reliable implementation of these control actions

poses communication challenges for the WAMAC system.

·

Emergency control – Emergency control focuses on

preservation of power system operations without endangering the power

equipments. WAMAC should perform multiple remedial control schemes in adaptive

and timely manners providing integrity and generation-load balances of power

system areas.

·

Automated restoration – The ideal goal of

restoration is fully automated self-assembly of the entire power system. In

this case, WAMAC must coordinate the re-synchronization of separated

transmission lines, reconnection of affected distribution systems and customer

loads. WAMAC must interact with Advanced Distribution Automation functions to

leverage DERs in support of the restoration actions.

The power system

is the largest and most complex system created by man. As it has grown, human

management and control of it has proved quite challenging. Moving to the future, an information based

Wide Area Measurement And Control system will be needed to provide

instantaneous (10s of ms) measures of the system conditions to enable dynamic

modeling of the various complex power system phenomena. Such requirement

involves gathering and coordinating the data from large areas and various

organizations. The creation of synchrophasors provides the technical means to

make unified measurements over a wide area.

The implementation over of wide area communications allows consolidation

of these measurements through what is known as Phasor Data Concentrators

(PDCs). The devices that create the synchronized measurements, commonly known

as Phasor Measurement Units (PMUs), often provide synchronized measurements

that span organizational and divisional boundaries. Organizational boundaries

would include sharing aggregated data between utilities. Data communication must only occur with those

entities authorized to receive the synchronized data. As much of the synchronized data indicates

instantaneous system state, this communication of this data must be secure for

some period of time as it could be used by power marketers in the pricing of

electricity. The present NERC agreement

between organizations that share data requires confidentiality of operations

data for 8 days. Divisional boundaries

include the issuance of control information to distribution companies and can

potentially migrate directly to direct load control in the home. This type of application requires the utmost

in secure and authenticated communications.

To validate

existing system models and to dynamically update them is another challenge for

WAMAC. Connectivity of the areas affected by a power system event needs to be updated

in the model in real time. Input data such as specific impacts of load inertia

on frequency-related phenomena or impacts of saturated devices are critical to

the model. Lack of these critical data is an issue to WAMAC and, as such, high

availability of the synchronized data is required.

WAMAC must

accomplish many complex decisions and control actions within milliseconds to

second time intervals. There are three security states in the power system

operations: normal, emergency and restorative. The timing requirements for each

of these states are different. The dynamics of loads, generation and system

topology drive the timing requirements for the normal state. Any decision made

when the system is in the normal state should be valid in the time interval

between the consecutive runs of the particular application. In the emergency

state, the time for the decision-making and its implementation is considerably

shorter than the normal state. In the emergency state, the power system

conditions could change dramatically in the 10s of millisecond range. The

amount of data that need to be processed by WAMAC is substantial in as much as

it is continually streamed. Failure to respond to the emergency conditions

could result in system instability and possibly lead to large scale blackouts.

The same timing requirement applies to the restoration actions due to the need

for simultaneous execution of control actions. Again, any control action need

to be secure and issued with the proper authority.

In the control

mode, WAMAC needs to coordinate the implementation of the power system state

machine. In the general case, an operational decision in power system

management consists of several control actions, each of which takes time to

finish. In many cases, a subsequent action depends on the successful completion

of the previous action. No harmful operation conditions should occur during the

intermediate steps.

To summarize, the

successful implementation of WAMAC relies on IntelliGrid Architecture to provide:

·

Automated information support/data aggregation

appropriate for the timing and complexity of the process to be controlled

o

Reliable, high-speed, secure, point to

multi-point communications

o

Automatic system configuration

o

Automated configuration and remote control of

executing devices or their modes of operations (settings)

o

High-speed application data access

o

Integration of different information systems

·

Secure information sharing among different

organizations

o

Authentication of control commands

o

Data confidentiality

·

Integration and coordination of centralized and

distributed intelligence

o

Integration of different control systems, such

as EMS, DMS and Market operation system

o

Cross-domain communications with Advanced

Distribution Automation applications

·

Utilization of modern information technologies to

solve data-overwhelm issue, to enhance data availability, and to provide modern

data visualization

Conclusion of Domain Use Case

Analysis

This section has

shown that in order to deploy the functionality describe in the Domain Use

Cases in an economical way, one has to deploy the functionality described in

one or more of the Abstract Use Cases.

However, besides deriving the Abstract Use Cases from Domain Use Cases,

one can also derive the Abstract Use Cases via a more theoretical

discussion. The following section

includes this discussion as well as analysis of the Abstract Use Cases for

derivation of the IntelliGrid Architecture.

On an abstract

level, one can state that IntelliGrid Architecture must support just two capabilities:

·

Provide support for the operation of existing

and future utility functions.

·

Provide support for the integration of existing

and future utility functions.

Note that support

for the operation of existing functions is known and currently implemented by

utilities. Note that support for the

operation of future functions is largely addressed by the development of new

applications and technologies. As IntelliGrid Architecture

is not an application or technology development project, this is out of scope. This leaves support for the integration of existing

and future utility functions as the primary issue to be solved by IntelliGrid Architecture. Also, since an architecture for non utility

specific functions will be driven by cross industry groups such as the W3C,

IEEE, or even major information technology vendors, IntelliGrid Architecture more narrowly focuses

on specializing these cross industry architectures to utility specific

functionality.

One can state that

integration issues related to utility specific activity are limited to:

A.

Integration of applications and data for

operational and analytic purposes.

B.

Integration of devices as well as hardware and

software services for operational and managerial purposes.

C.

Secure integration of applications, data, and

devices within a utility, between a utility and an energy market partner, and between

a utility and an operational partner.

The operational partner means an external entity that works with a

utility to meet operational as opposed to market driven goals.

The common terms

for Group A include:

I. Application

integration

II. Data

integration

The common terms

for Group B include:

III.

Device integration

IV.

Enterprise management

The common terms

for Group C include:

V. Energy

Trading

VI.

Security Integration – especially security

across security domains

The IntelliGrid Architecture Team

believes that these six abstract use cases provide complete coverage of utility

specific functionality required for the complete analysis for comprehensive

architecture.

The goals of IntelliGrid Architecture Enterprise Architecture include:

·

Establish an architecture to integrate all of

the utility enterprise - from Energy Market partners to backend systems to

devices.

·

Enable comprehensive and unified views of the

utility enterprise to allow creation of new applications that can look across

the utility and focus on end-to-end profitability and reliability.

·

Establish Comprehensive Security Architecture

that accommodates integration of autonomous security domains.

· Create

migration plan whereby legacy applications can be adapted to conform to the

IntelliGrid Architecture and new application can be non-disruptively added.

Figure 7 below portrays the different elements than need to be

integrated by IntelliGrid Architecture.

Figure 7 IntelliGrid Architecture Secure Enterprise Architecture

This section

describes the Abstract Use Cases in more detail and why these tasks play an

important part of enterprise integration and the development of higher-level

profitability and reliability focused analysis applications

Integration of Enterprise

Management and Power System Services

This section

describes the challenges facing the integration of Enterprise Management,

sometimes called communications System Management or Network Management, into

the power system.

In order to create

a truly reliable power system, IntelliGrid Architecture team needed to consider more than just

power system services. Modern utilities

monitor and control the power system via a vast network of

communication-enabled devices.

Traditionally, the data related to power system operation and

communication system operation has been treated independently, as illustrated

in Figure 8.

Figure

8 Enterprise Management and Power System Management Treated

Independently

However, operation

of the power system is now completely dependent on successful operation of the

communication system. It is clear that

in order to achieve a comprehensive view of end-to-end reliability one needs to

integrate communications system and power system analysis as shown below:

Figure 9 Integration of Enterprise and Power

System Management

System/network

management, also referred to as enterprise management, is the task of ensuring

that the systems and the network provide the required services with the

specified quality of service to the users and other systems. Most enterprise

management architectures use agent-manager relationships where the agents,

residing on the managed elements, provide management information, such as

alerts or performance measurements, to the manager.

The manager reacts

to these messages by executing one or more actions such as:

·

Operator notification

·

Event logging

·

System shutdown

·

Automatic attempts at system repair.

Management

entities also poll managed elements, automatically or upon user request, to

check the values of certain attributes of the managed device. Agents have

information about the managed devices in which they reside and provide that

information (proactively or reactively) to management entities within an

enterprise management system using a management protocol.

Typically,

enterprise management functions are performed on the following managed

elements:

·

Network devices such as routers,

switches, hubs, customer premises equipment and communication links;

·

Computing resources such as substation

automation systems and data concentrators; servers such as Market Transaction

Servers;

·

Software services such as SCADA, EMS, or GIS components, as well as database management

systems;

·

Service and business functions such as RTP customer pricing service, security and

operational policy servers; and

·

Storage area networks.

In IntelliGrid Architecture, the team

adds the power systems network-aware devices such as IEDs and RTUs to the

above.

The International

Organization for Standardization (ISO) has defined the following network

management functions for fault, configuration, accounting, performance and

security (FCAPS) management. Although defined for network management, these

functions can be generalized to systems and applications management.

Fault Management Function- Fault

management detects, fixes, logs, and reports network problems. Fault management

involves determining symptoms through measurements and monitoring, isolating

the problem, fixing the problem through reconfiguration, reset, technician

dispatch, etc.

NOTE: In this context, Fault Management does

not refer to power system faults, but faults in the communications network.

Configuration Management Function - Configuration

management, complements fault, involves maintaining an inventory of the network

and system configuration information. This information is used to assure

inter-operability and problem detection. Examples of configuration information

include device/system operating system name and version, types and capacity of

interfaces, types and version of the protocol stacks, type and version of

network management software, etc. Configuration management complements the

other functions fault, performance and security management.

Accounting Management Function -

Account management keeps track of usage per account, billing, and ensures

resources are available according to the account requirements.

Performance Management Function - The

task of performance management involves measurements of various metrics for

system/network performance, analysis of the measurements to determine normal

levels, and determination of appropriate threshold values to ensure required

level of performance for each service. Examples of performance metrics include

network throughput, user response times, CPU, memory and line utilization.

Management entities continually monitor values of the performance metrics. An

alert is generated and sent to the network management system when a threshold

is exceeded

Security Management Function - Security

management is to control access to network resources according to security

guidelines. Security manager partitions network resources into authorized and

unauthorized areas. Users are provided access rights to one or more areas.

Security managers identify sensitive network resources (including systems,

files, and other entities) and determine accessibility of users and the

resources. Security manager monitors